Back, after an unwelcome hiatus. I've learnt my lesson, though, and will be backing up my WordPress database. Regularly.

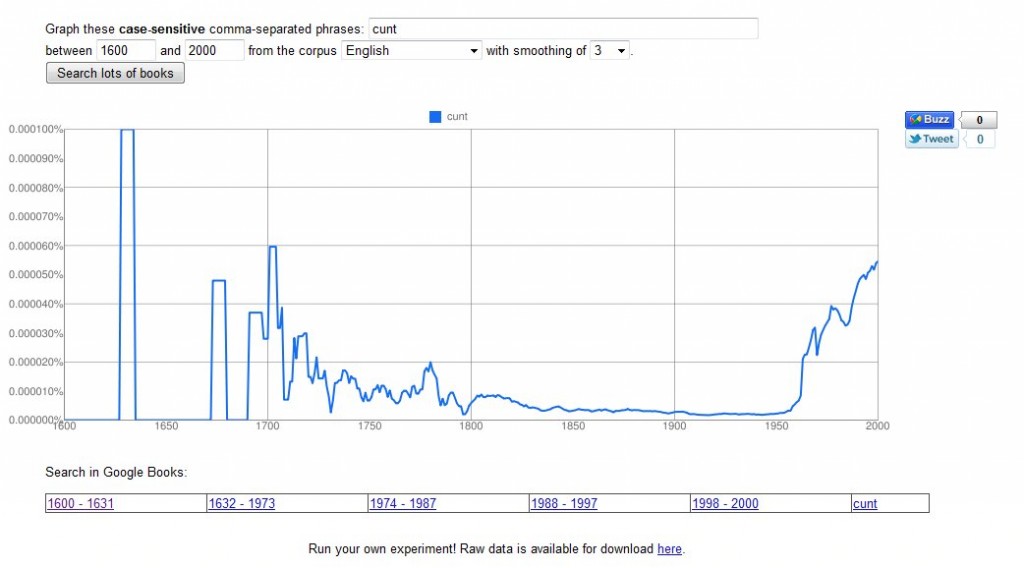

Anyway, being a linguist of the sweary variety, I was intrigued to see someone on Twitter use Google Labs' Ngram Viewer to look up cunt, expressing surprise and delight that it was being used so frequently, and so much earlier than expected.

I thought the graph looked interesting. The frequency of cunt was rather erratic: a large isolated peak around 1625–35; a smaller isolated peak around 1675; peaks at 1690ish and 1705ish; a rather spiky presence between 1705 and 1800; then a fairly consistently low frequency until around 1950, when it rises again.

This seemed puzzling – rather than being fairly low-level but present, there were these huge spikes in the 17th century. I decided to have a look at the texts themselves. These turned out to be in Latin, and the following image rather neatly illustrates the two different meanings at work here:

The books themselves seem to be religious texts written in Latin, even if Google’s ever-helpful advertising algorithm seems to interpret things rather differently. As you can see in the first image, I selected texts from the English corpus. It’s possible that the books are assigned a corpus based on their place of publication, but it’s not very intuitive.

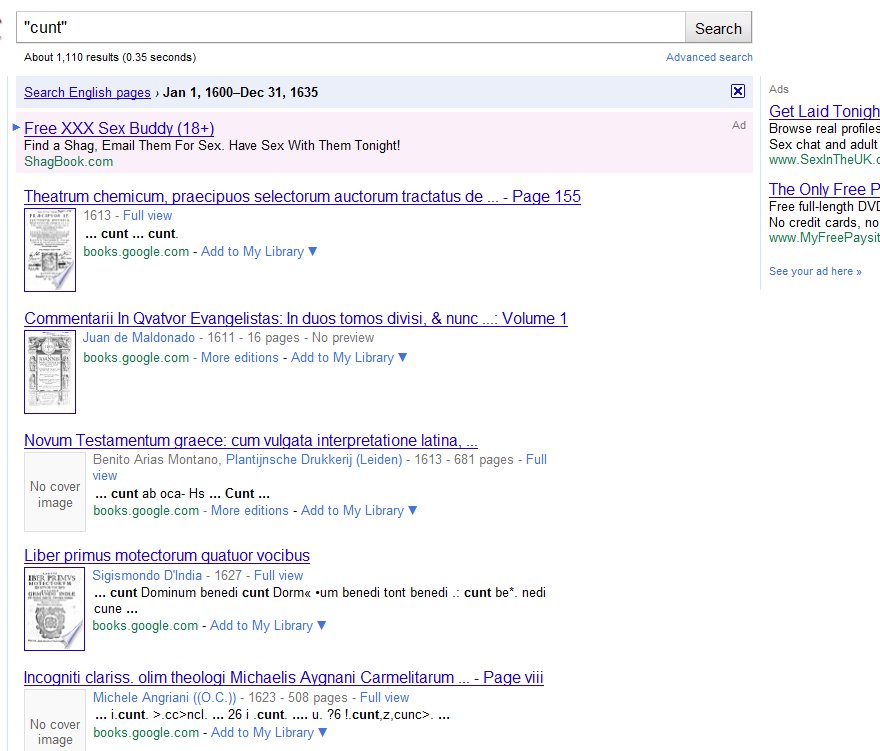

I took a closer look at the texts to try and work out what was going on. Some were in Latin, like this example from De paradiso voluptatis quem scriptura sacra Genesis secundo et tertio capite:

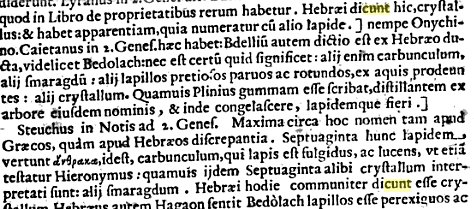

However, this was not the only issue. I found at least one example of a musical score – this example taken from Liber primus motectorum quatuor vocibus:

Here, the full lexical item is benedicunt. In both of these examples, cunt is not a full lexical item; I can understand why the layout of the score might have led to it being parsed as a separate item, but I’m a bit confused why the same seems to have happened with dicunt.

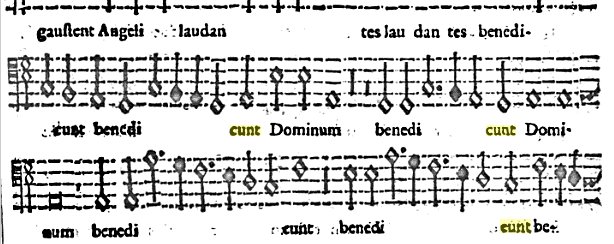
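If the lyrics were indexed syllable by syllable, that alone would explain the score. Here's a minimal Python sketch of the idea. The tokenizer is hypothetical (I don't know how Google actually splits its text), and both Latin snippets are invented for illustration rather than taken from the scanned books. Splitting on anything that isn't a letter leaves benedicunt intact in running prose, but turns be - ne - di - cunt into four separate tokens, one of which is cunt.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Hypothetical tokenizer: lowercase the text, then split on any run of non-letters.

    Spaces and hyphens between syllables therefore act as word boundaries.
    """
    return [t for t in re.split(r"[^a-z]+", text.lower()) if t]


# Invented snippets: the same verb as running prose and as syllabified lyrics.
prose = "Benedicunt Dominum omnes gentes"
lyrics = "Be - ne - di - cunt Do - mi - num"

for label, text in [("prose", prose), ("lyrics", lyrics)]:
    counts = Counter(tokenize(text))
    print(label, counts["cunt"])  # prose 0, lyrics 1
```

A whole-word search over these tokens would find cunt in the score but not in the prose, which fits the score result, though it doesn't account for dicunt.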

Some of the high frequency of cunt can also be attributed to Optical Character Recognition (OCR) errors. Basically, the page is scanned and a computer program tries to convert the image into text. Accuracy varies – it can be very good, but the size and typeface of the print, the paper it was printed on and the age of the text all have an effect. The text produced by OCR is then linked to the page image.

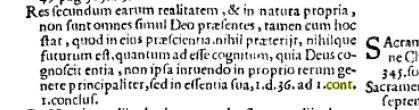

This example, taken from Incogniti clariss. olim theologi Michaelis Aygnani Carmelitarum Generalis, will probably be familiar to anyone working with OCR-scanned texts. The text actually reads cont., but the OCR has read it as cunt. The search program can't read the image files; all it has to go on is the OCR output. When that isn't accurate, you get results like these.
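The point that the search only ever sees the OCR text can be shown in a few lines of Python. This is a sketch under assumptions: `count_word` is a hypothetical helper doing a case-insensitive whole-word match, and the printed line is invented. Only the cont. to cunt misreading comes from the example above.

```python
import re


def count_word(text: str, word: str) -> int:
    """Hypothetical helper: count case-insensitive, whole-word occurrences of `word`."""
    pattern = rf"\b{re.escape(word)}\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


# Invented line: what is printed on the page...
printed = "vide cont. infra"
# ...and an OCR reading in which the 'o' has been recognised as a 'u'.
ocr_text = printed.replace("cont.", "cunt.")

# The search index is built from the OCR text, never from the page image.
print(count_word(printed, "cunt"))   # 0
print(count_word(ocr_text, "cunt"))  # 1
```

One wrong character is enough to create a hit, and nothing in the OCR text itself flags it as a misreading.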

I think Google Ngram is interesting, but with some caveats. Corpora can be tiny – the researcher can have read every single text in their corpus and know it inside out. Corpora can be large and highly structured, like the British National Corpus. Corpora can also be large without the researcher having read every text in them, but through careful compilation the researcher knows where the texts came from, where they were published and so on – for example, corpora assembled through LexisNexis. This is a bit different: it's not really clear what's even in the collection, and the researcher has to trust that Google has put the right texts in the right language section. I've seen Google Ngrams being used to gauge the relative frequencies of two or more phrases, but for now I think I'll stick to more traditional corpora for most in-depth work.
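It's also worth remembering what the graph measures. As I understand it, Ngram Viewer plots an ngram's count in a given year as a proportion of all the ngrams of that length in that year's books, and the collection holds far fewer books from the 1600s than from the 1900s. A handful of stray Latin or OCR hits can therefore look like a huge spike in an early year. A rough Python sketch, in which `relative_frequency` is a hypothetical helper and the counts are entirely made up:

```python
def relative_frequency(hits: int, total_tokens: int) -> float:
    """Hypothetical helper: the target word's share of all tokens in one year."""
    return hits / total_tokens if total_tokens else 0.0


# Made-up counts, for illustration only: a sparse early year and a well-stocked later one.
years = {
    1630: {"hits": 3, "total": 2_000_000},
    1930: {"hits": 30, "total": 2_000_000_000},
}

for year, d in years.items():
    # Prints 1630 1.50e-06, then 1930 1.50e-08
    print(year, f"{relative_frequency(d['hits'], d['total']):.2e}")
```

Three hits in the sparse year come out a hundred times more frequent than thirty hits in the well-stocked one, which is one plausible reason for the erratic early peaks.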

Mark Davies also has a post comparing the Corpus of Historical American English with Google Books/Culturomics. His post is in-depth, interesting and systematic; I just swear a lot. You should probably read his.